It’s fair to say that talk of artificial intelligence (AI) has increased dramatically over the last 12 months.

According to Google Trends, search interest in AI in the UK is around five times higher today than it was in March 2022, and around three times higher worldwide. There are ongoing conversations about the implications for workers, artists, and creatives, and about the ethics of outsourcing work to computer programs trained on the work of others without permission or compensation.

Simple AI chatbots have been used for years by companies that want to give customers a way to get in touch 24 hours a day, 7 days a week. However, the recent evolution of AI technology has opened up an avalanche of new and potentially dangerous ways for AI to be abused.

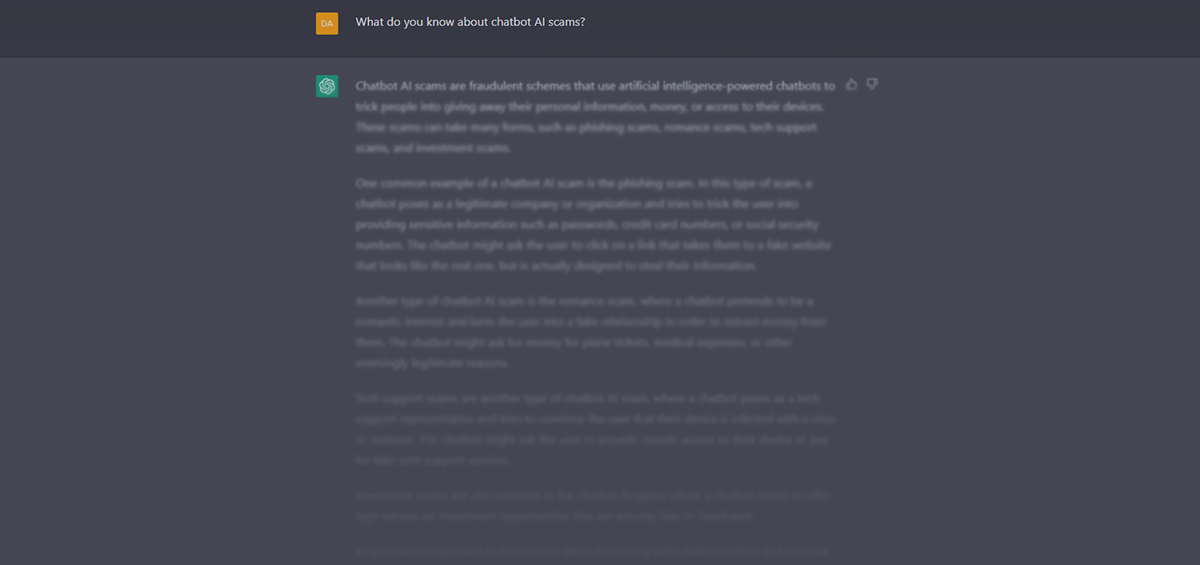

What are AI chatbot scams?

AI chatbot scams are, perhaps predictably, scams that use an AI chatbot to deceive victims. At CEL Solicitors, we help fraud and scam victims recover lost money every day after they have been targeted by criminals. For the most part, these criminals can only target a handful of people at a time.

As an example, the infamous Tinder Swindler, Simon Leviev, manipulated multiple women into fuelling his jet-setting lifestyle. He took money from each of his victims simultaneously to create the illusion of being a wealthy diamond mogul. Even so, he was only one man, which meant he could only target a limited number of people at any one time.

In the age of AI, chatbots are advanced enough to pass as human to anyone unaware they are talking to a machine, and tech-savvy con artists can and do use these tools to commit fraud on a grand scale. A single fraudster could potentially fool and defraud thousands of people at the same time, and keep the deception going for weeks, if not months, with relative ease.

How do AI chatbot scams work?

AI chatbot scams work in much the same way as traditional online scams, whether investment, romance or impersonation scams.

They typically start with the fraudster reaching out to their target while pretending to be somebody they’re not. This could be a swipe from an apparently interested match on a dating app, a message on social media, or an approach from a supposed recruiter for an investment company offering a too-good-to-miss opportunity.

The only difference in an AI chatbot scam is that the person you think you are talking to may not even be real. AI chatbots can already remember earlier parts of a conversation and answer follow-up questions in a logical and natural way, and they can do this while “talking” to thousands of people at the same time.

If you have spent any time at all talking to the ChatGPT bot, Google’s Bard, or Microsoft’s new Bing AI, you will have found that, for the most part, they are able to hold fairly substantial conversations in a frighteningly realistic way.

In a recent report, Europol, the European Union Agency for Law Enforcement Cooperation, warned:

“[It] is now possible to impersonate an organisation or individual in a highly realistic manner even with only a basic grasp of the English language.

Critically, the context of the phishing email can be adapted easily depending on the needs of the threat actor, ranging from fraudulent investment opportunities to business e-mail compromise and CEO fraud. ChatGPT may therefore offer criminals new opportunities, especially for crimes involving social engineering, given its abilities to respond to messages in context and adopt a specific writing style. Additionally, various types of online fraud can be given added legitimacy by using ChatGPT to generate fake social media engagement, for instance to promote a fraudulent investment offer.”

How to avoid AI chatbot scams

The best ways to avoid an AI chatbot scam are much the same as our advice for avoiding regular person-to-person scams.

Always be wary of messages from people you don’t know

If someone you haven’t met or spoken with before contacts you online out of the blue, be careful. They will likely start the conversation in a way that feels genuine and may not make any suspicious moves for days, weeks or even months. This is known as a “long con”.

Never click on links sent by somebody you don’t know. This applies as much to emails as it does to direct messages on social media or dating apps.

Be especially wary if the person asks you to download software, particularly screen-sharing software such as AnyDesk, TeamViewer or LogMeIn, which allows them to see or control your screen. Never download a program because someone you do not know has asked you to.

Search for the person online

Look online to see whether the person contacting you has other social media accounts. Perhaps they have a LinkedIn profile that lists their workplace and colleagues, or an active Facebook or Instagram account they have been using for years, which you can use to confirm they are who they say they are.

Searching for the person online may also reveal other victims who have been targeted by the fraudster, as was the case with Simon Leviev.

Be suspicious of their pictures

If all of the images on the account look suspiciously similar or appear edited, they may have been generated or manipulated using AI. Image-generating AI can create incredibly realistic pictures and selfies of almost anybody in seconds, so it’s vital that you inspect the pictures for inconsistencies.

Perhaps a birthmark appears and disappears, or their eye colour changes between pictures. Image-generating AI also currently struggles to replicate realistic-looking hands, and very often generates hands with the wrong number of fingers.

Are they unable to show their face on video?

Romance scammers (also known as catfish) have been using the excuse that their “webcam is broken” for decades, yet it remains a go-to excuse for fraudsters who want to hide their true identity. In the case of AI chatbot scams, it is because there is no real face to show.

As the technology continues to develop, be aware that fraudsters may also use deepfake technology to create realistic-looking recreations of real people in order to fool victims.

Never give out your personal information

Again, this piece of advice applies as much to human scammers as it does to virtual AI scammers, but it’s so important that it’s still worth covering.

You should never give out your personal information to somebody you have never met. This doesn’t mean you can’t tell somebody your name or that you live in London, particularly if you’re talking on a dating app, but it does mean you should be careful about what you reveal, especially when it comes to financial or banking information.

If they are particularly interested in what you do for work, how much you earn, whether you invest, or whether you are having money problems, these are all major red flags that something may be wrong.

At CEL Solicitors, we often find that fraudsters start out by contacting their targets on dating apps, pretending to be an interested romantic partner, and then eventually steer the conversation towards an investment opportunity that they claim will make you money.

They can’t meet in person

If you have been speaking with someone you met online for a while, you will naturally want to meet in person. If they claim they are unable to meet face to face, or keep putting it off with excuses, this may be a red flag. If they are an AI chatbot, they will never be able to meet you in real life, because they do not exist.

There is no getting away from the fact that technology is advancing at an ever-increasing rate. Microsoft co-founder Bill Gates claims that AI is the “most important tech advance in decades” and that it will “change the way people work, learn, travel, get health care, and communicate with each other”.

As with any technological advance, there will be people who misuse it for their own benefit, regardless of who they hurt. This is why it’s so important that people are as prepared as possible, and that help and support are available for those unfortunate enough to be targeted.